-

Troubleshooting RSpec load time

by Jane Sandberg

In one of our applications, we found that tests were taking quite some time to load. For example, when running a relatively quick spec file, we got output like this:

bundle exec rspec spec/models/user_spec.rb User #catalog_admin? identifies non-administrator users with an administrator identifies administrator users using the UID .from_cas finds or creates user in the database Finished in 0.16932 seconds (files took 7.99 seconds to load) 3 examples, 0 failuresThe tests themselves only took a fraction of a second, but the files took 8 seconds to load. While not the end of the world, this did affect test-driven development, because even very small changes still required that an annoying wait before you could see a passing or failing test.

I felt that I got into a good rhythm troubleshooting this load time, and it helped to identify two low-hanging fruit improvements, so I wanted to share my process.

-

Migrating to Blacklight’s Built-in Advanced Search

by Christina Chortaria, Regine Heberlein, Max Kadel, Ryan Laddusaw, Kevin Reiss, and Jane Sandberg

As part of migrating our catalog from Blacklight 7 to Blacklight 8, we made the decision to move away from the blacklight_advanced_search gem in favor of the built-in advanced search features that come with modern versions of Blacklight. This has been a long (18 months!) process, filled with roadblocks and competing priorities. While it took longer than we expected, we were able to do this migration in small incremental steps that

- migrated our catalog’s two advanced search forms to use Blacklight’s built-in advanced search features,

- allowed us to set aside the work as needed and come back to it easily months later,

- kept our catalog working and deployable throughout the process,

- could be easily rolled back in case of a problem, and

- didn’t require the use of long-running (and frequently rebased) git branches.

This post describes our process, in the hopes that it is useful to other Blacklight users who wish to implement the built-in advanced search, or others who are interested in moving away from a tightly coupled third-party dependency.

-

How to Set Up Streaming Replication (aka Warm Standbys)

by Alicia Cozine, Anna Headley, Francis Kayiwa, and Trey Pendragon

In our previous post we described how to set up logical replication and use it as a migration strategy between different versions of PostgreSQL. Here we’ll describe how to set up streaming replication, for use as an always-up-to-date failover copy.

Requirements

Two identical PostgreSQL 15 machines, named here as

leaderandfollowerSteps

- Ensure PostgreSQL 15 is installed on both machines.

-

Migrating to PostgreSQL 15 with Logical Replication

by Alicia Cozine, Anna Headley, Francis Kayiwa, and Trey Pendragon

In our previous post, we described how we decided to migrate a very large database using logical replication. In this post we will detail the steps we followed to make it happen.

How to Set Up Logical Replication

Before the migration really began, we set up logical replication. This recreated our very large database in an upgraded version of PostgreSQL.

To set up logical replication, we started with the AWS Database Migration Guide, then expanded and adapted the steps to our local environment.

Here’s how we did it:

First set up the publisher.

- Ensure the source system (the publisher) can accept requests from the target system (the subscriber): edit the pg_hba.conf file on the source system (for us it was our old PostgreSQL 10 machine). Edit ‘/etc/postgresql/

/main/pg_hba.conf': host all all <IP_of_subscriber/32> md5

- Ensure the source system (the publisher) can accept requests from the target system (the subscriber): edit the pg_hba.conf file on the source system (for us it was our old PostgreSQL 10 machine). Edit ‘/etc/postgresql/

-

Reflections from All Things Open

by Bess Sadler

All Things Open is an annual conference “focused on the tools, processes, and people making open source possible.” This was my second time attending, but the first time I’ve gone in many years. I found it to be a valuable conference, and after years of attending mostly only repository or library technology conferences, it was exciting to get exposed to other industries and to see where the overlaps are between commercial open source software and the smaller scope of digital library development that I’m used to.

A huge thank you to All Things Open and Google for providing free passes to everyone in Princeton University Library’s Early Career Fellowship.

AI Was Everywhere

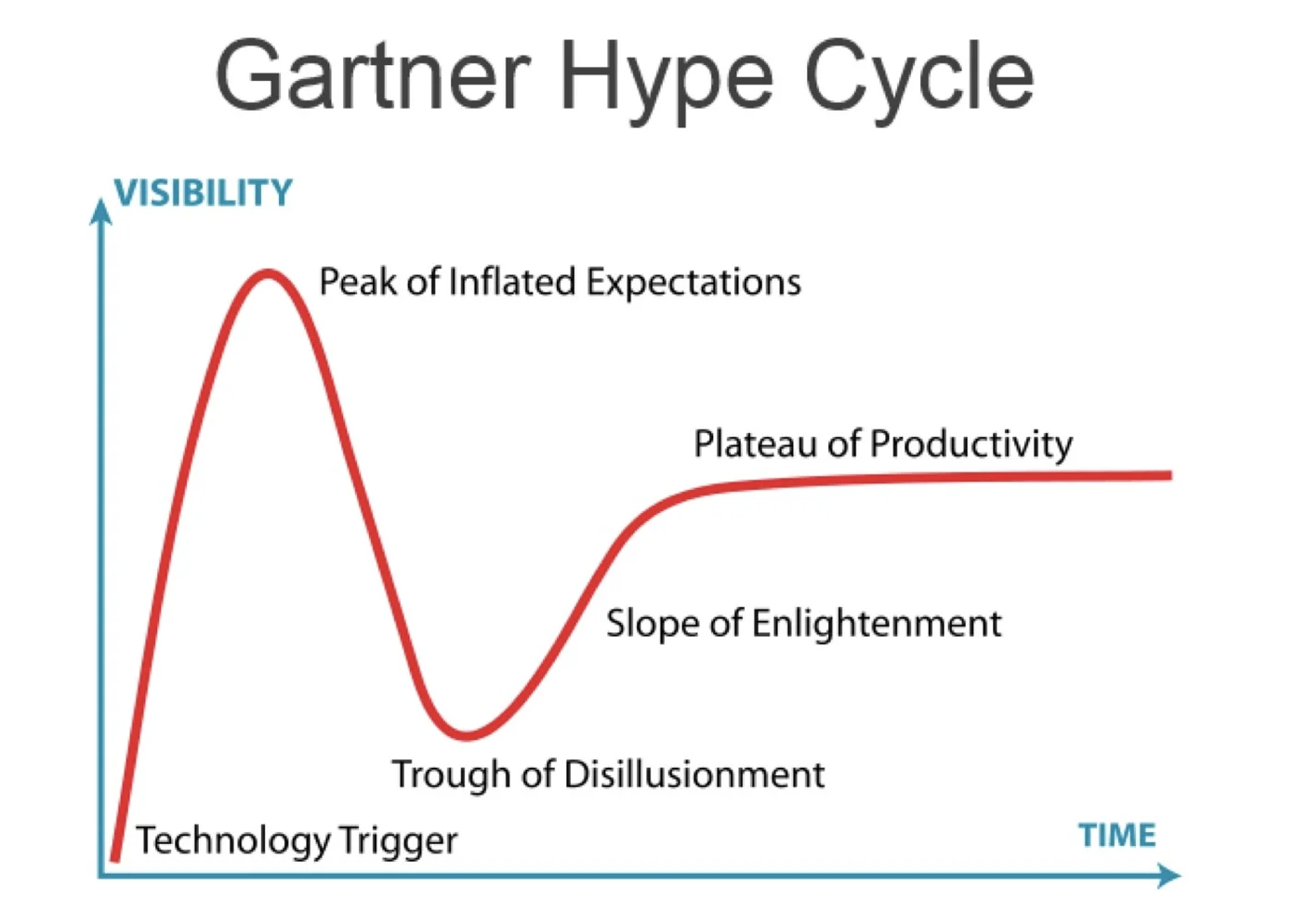

At the conference, AI seemed to be everywhere. Hardly a surprise, since the leaps in functionality demonstrated in the past year by large language models (LLMs) have astonished people so much that at least a few folks seem to have abandoned common sense. I’ve heard a lot of what seems to be overinflated hype on the subject, and it was refreshing when one of the keynote speakers, Lawrence Moroney (AI Advocacy Lead @ Google) contextualized this with an overview of the Gartner Hype Cycle.

-

Migrating PostgreSQL via Replication

by Alicia Cozine, Anna Headley, Francis Kayiwa, and Trey Pendragon

The Database in Question

Our digital repository application, fondly known as Figgy, has a database that’s 200GB. 99% of the data is in one giant table in a metadata (JSON-B) column. The database is indexed in Solr, and reindexing takes at least 16 hours. Before our adventure began, the database lived in a Postgres 10 instance on an old physical server. The hardware and software were all end-of-life (EOL). We needed to migrate it. But how?

The Process

We wanted to approach the migration in a cross-team, low-stress way. We chartered a PostgreSQL book club with two rules: meet for one hour each week, and no work outside the meeting (“no homework”). We wanted to answer a lot of questions about postgresql, but we needed to migrate the Figgy database first.

Options & Decision

First we started to build a copy of our production infrastructure on Postgres 10, so that we could carefully try out different migration strategies to move fully from 10 to 15. This turned out to be difficult. All the versions were so old - we would have needed EOL Ubuntu servers to build the EOL Postgres versions, which seemed like wasted effort.

-

Accessible Mermaid charts in Github Markdown

by Christina Chortaria, Isha Sinha, Regine Heberlein, and Jane Sandberg

After we saw Github’s announcement about including Mermaid diagrams in markdown files, we started using them extensively! We use these diagrams to confirm understanding with stakeholders, keep our diagrams up-to-date and under source control, and even plan work cycles. Our colleague, Carolyn Cole, gave an excellent talk at RubyConf on how we use Mermaid charts [youtube video with English captions].

As with any complex diagrams, though, it takes some planning to make these charts accessible. If your team doesn’t have the capacity to implement the following pieces, your team may not be ready to use this integration.

Checklist

Every mermaid chart in github markdown should have the following:

- An accessible name, provided by a

titlein the front matter or some other way. - A detailed text description, either outside the chart, or within it and identified by the

accDescrkeyword. - No

themespecified in the%%{init}%%block (otherwise, the chart may be unreadable in dark mode). - If

themeVariablesare specified in the%%{init}%%block, ensure the results have sufficient contrast when dark mode is turned off or on. - If you include multiple mermaid charts in the same page, confirm that they are integrated into a meaningful heading structure.

- An accessible name, provided by a

-

Moving Rails Apps off of Webpacker

by Anna Headley, Carolyn Cole, Eliot Jordan, Jane Sandberg

It is thankfully time to excise webpacker from all our Rails applications, and there are a lot of options for which tools to adopt.

For more background, see

- DHH’s summary of JavaScript in Rails 7

- The Rails 7 release announcement

- Rails Guides on Working with Javascript and the Asset Pipeline

Our Rails apps at PUL are not running on Rails 7 yet but we have been moving off of webpacker in preparation for this upgrade, and because webpacker is no longer supported. A number of us have tried a variety of options on a variety of apps, and we summarize our experiments and reflections here.

-

Configuring blacklight_dynamic_sitemap

by Bess Sadler

For pdc_discovery, a blacklight application at Princeton to improve findability for open access data sets, we want to publish a sitemap so that search engine crawlers can more easily index our content. Orangelight, Princeton’s library catalog, which is also a Blacklight application, uses an older system, blacklight-sitemap. However, the

blacklight-sitemapgem hasn’t been updated in awhile, and using rake tasks to re-generate very large sitemaps is less than ideal because it takes time and the sitemaps become stale quickly. Given these drawbacks to our existing approach, I was excited to try the more recent solution in use at Stanford and Penn State (among others): blacklight_dynamic_sitemap. -

Rails App Deployed to Subdirectory Behind a Load Balancer

by Bess Sadler

You know that sinking feeling you get when you’ve been working on an application for months, you’re about to launch it, and then a new requirement appears at the last minute? It’s been one of those weeks. However, after a couple of days of head scratching, I’m happy to report that we just might keep our launch schedule after all.

The requirement in question shouldn’t be hard. We need to take PDC Discovery, PUL’s new research data discovery application, and serve it out at a subdirectory. So, instead of delivering it at

https://pdc-discovery-prod.princeton.edu(which we always knew was a placeholder), it needs to appear athttps://datacommons.princeton.edu/discovery/. That/discoveryat the end is the tricky bit. -

Preservation Packaging

by Esmé Cowles

As we are implementing preservation in Figgy, we have faced a couple of challenging decisions that lay just outside the concern of preservation-oriented specifications like BagIt and OCFL. Two inter-related questions that took us a lot of discussion and exploration to reach consensus on are:

- What is the best unit of preservation?

- Should preservation packages be compressed and/or archived?

-

Preservation

by Trey Pendragon

Lately we’ve been working on preservation within Figgy and have come up with a strategy we think works for us. However, in doing so, we’ve evaluated a few options and come up with some assumptions which could be useful to others.

-

Valkyrie, Reimagining the Samvera Community

by Esmé Cowles

I generally don’t find looking at slides to be a good substitute for watching a talk, and I’d rather read a text version than watch a video, so I thought I’d write up a text version of my talk at Open Repositories.

-

Valkyrie

We have been working on Plum for the last two years and in that time we have constantly struggled with performance for bulk loading, editing, and data migration. We have fixed some problems, and found ways to work around others by refactoring and/or disabling parts of the Hyrax stack. However, our performance is still not acceptable for production. For example, when we edit large books (500+ pages), the time to save grows linearly with the number of pages, climbing to a minute or more. Bulk operations on a few hundred objects can take several hours. We want a platform with robust, scalable support for complex objects, especially large or complicated ones that cannot be served by existing platforms. We have a number of books with more than 1000 pages, and book sets with dozens of volumes, and we need to support their ingest, maintenance, access, and preservation.